By Prashant Vora, Senior Practice Director – Autonomous Driving, KPIT Technologies Ltd.

Cameras, radars, lidars, and ultrasonic sensors are the main types of sensors used to develop vehicles equipped with Advanced Driver Assistance Systems (ADAS) and Autonomous Vehicles (AVs).

Sensors are the ‘eyes’ of an autonomous vehicle (AV): they help the vehicle perceive its surroundings, e.g., people, objects, traffic, road geometry, and weather. This perception is critical because it ensures that the AV can make the right decisions, e.g., stop, accelerate, or turn.

As we develop AVs with higher levels of autonomy (L2+ and above), where autonomous features take over driver tasks, multiple sensors are required to understand the environment correctly.

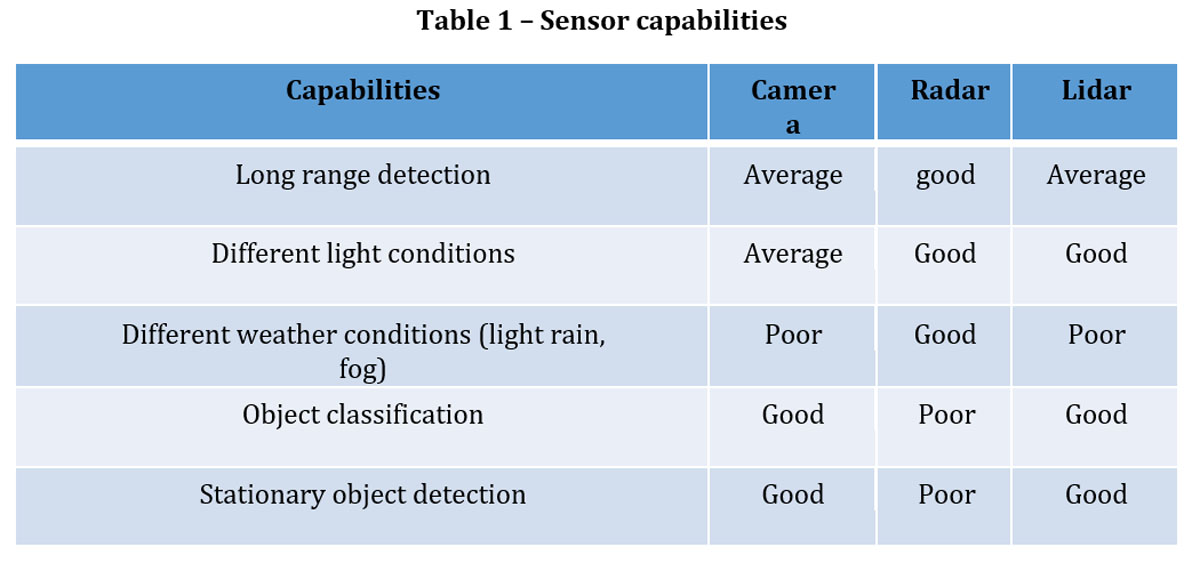

But every sensor is different and has its own limitations. For example, a camera works very well for lane detection or object classification, whereas a radar provides good data for long-range detection and under varying light conditions.

Thus, in an AV, data from multiple sensors is fused using Sensor Fusion techniques to provide the best possible input, so that the AV makes the correct decisions (brake, accelerate, turn, etc.).

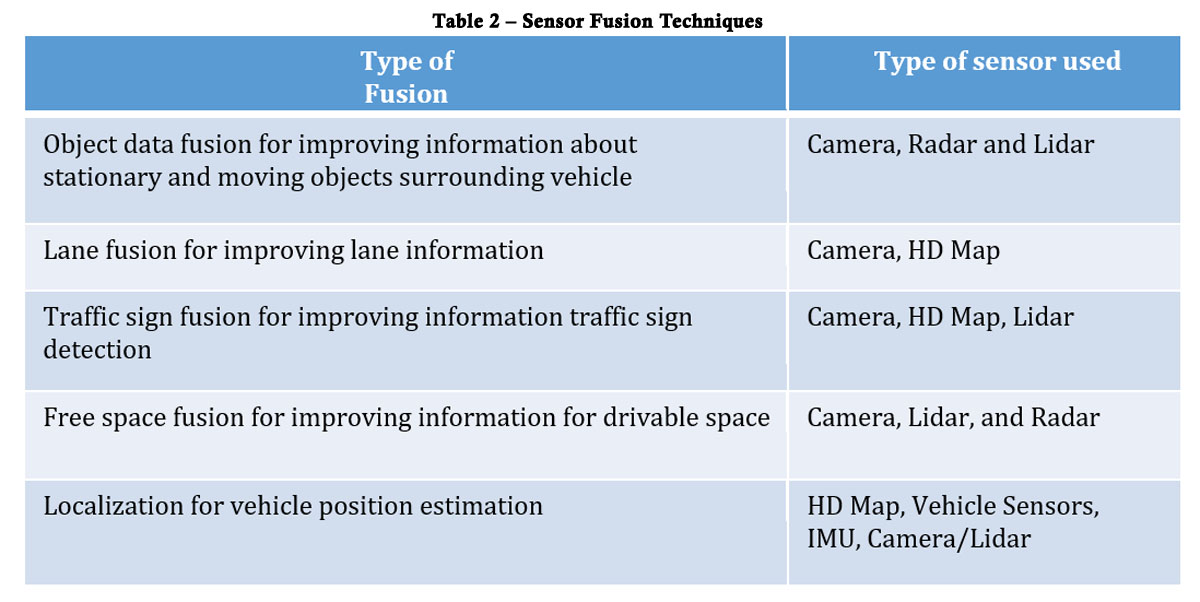

Sensor Fusion improves the overall performance of an Autonomous Vehicle. There are multiple fusion techniques, and which one to use depends on the feature's Operational Design Domain (ODD).

Below are a few examples of the different types of fusion techniques. Depending on the level of autonomy of the AV, one or more sensor fusion techniques will be used. For example, Level 2 feature development may use only object fusion, lane fusion, and traffic sign fusion, as per the feature requirements, whereas Level 3 and above may require all fusion techniques.

The complexity of the environment and the feature specifications drive which fusion strategy and which fusion requirements need to be worked out.

Executing Sensor Fusion is a complex activity, and one should account for numerous challenges:

- Different sensors output data at different sampling rates (e.g., every 50 ms or every 60 ms)

- A sensor may produce multiple detections from the same object (e.g., a large truck may give multiple radar reflections)

- Sensors can produce false detections or miss detections (e.g., a camera may fail to detect an object or lane at night or at dusk)

- Objects might remain in a sensor's blind zone for some time, or move dynamically within it

- Output accuracy varies between sensors and across different regions of each sensor's field of view (FOV)

- Sensors are mounted at different positions on the vehicle

- Sensor performance varies with environmental conditions

- The detection confidence reported by a sensor may be low
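The multiple-reflection challenge above is often handled by clustering raw detections before fusion. The sketch below is a minimal, hypothetical illustration, not KPIT's method: the function name `cluster_detections` and the 1.5 m `max_gap` threshold are assumptions. Detections linked by a chain of neighbours closer than `max_gap` form one cluster, and each cluster's centroid becomes a single object hypothesis.

```python
import math


def cluster_detections(points, max_gap=1.5):
    """Group 2-D detections (x, y) in metres using single-linkage clustering.

    Two detections end up in the same cluster if a chain of neighbours,
    each closer than max_gap (assumed threshold), connects them.
    Returns (labels, centroids).
    """
    n = len(points)
    labels = [-1] * n
    cluster_id = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue
        # Flood-fill outward from the seed detection
        labels[seed] = cluster_id
        stack = [seed]
        while stack:
            i = stack.pop()
            for j in range(n):
                if labels[j] == -1 and math.dist(points[i], points[j]) < max_gap:
                    labels[j] = cluster_id
                    stack.append(j)
        cluster_id += 1

    # One centroid per cluster = one object-level hypothesis
    centroids = []
    for c in range(cluster_id):
        members = [p for p, lab in zip(points, labels) if lab == c]
        cx = sum(p[0] for p in members) / len(members)
        cy = sum(p[1] for p in members) / len(members)
        centroids.append((cx, cy))
    return labels, centroids
```

For example, three reflections at (20.0, 0.0), (21.0, 0.5), and (22.0, 0.3) from one truck collapse into a single cluster, while a car at (40.0, 3.0) stays separate. Production pipelines typically use density-based clustering and may also compare Doppler velocities; this version only looks at position.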

These challenges may become more complex as we develop vehicles with higher levels of autonomy. Multiple sensors may be needed to get closer to 360-degree coverage and avoid blind spots, which generally involves a trade-off between cost and feature requirements. The placement of sensors is also crucial: it should minimize blind zones to achieve the best performance without compromising safety requirements.

Additionally, the accuracy of every sensor is different, and managing the data accuracy of each sensor is critical for the final output. Thus, it is very important to understand the problem statement and the environment in order to account for these challenges.

To ensure high levels of accuracy with Sensor Fusion, one must define the right KPIs and a robust design:

- Ensuring that all high-level KPIs are in place, i.e.:

  - A single track for each object

  - No false or missed object detections

  - Improved overall accuracy

  - A confidence level for each output

  - An output estimate in sensor blind zones

- Higher accuracy through design:

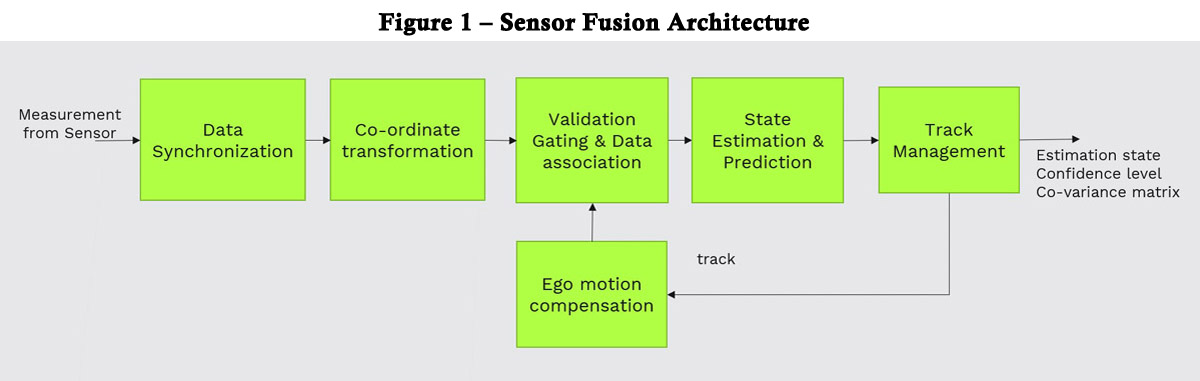

Below is an example of a Sensor Fusion architecture that can be used to ensure the highest levels of accuracy for object detection. There can be different architectures depending on the type of fusion, e.g., high-level fusion, raw sensor fusion, etc.

Data Synchronization: Different sensors give outputs at different rates, e.g., a camera may output data every 50 milliseconds and a radar every 60 milliseconds. The Data Synchronization technique aligns this data to a common time base before Sensor Fusion is executed.
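As a minimal sketch of data synchronization, assuming each measurement carries a timestamp and a velocity estimate, the hypothetical helpers below (`Measurement`, `align_to`, `synchronize`) shift every measurement to a common fusion timestamp using a constant-velocity model. Real systems may instead interpolate between buffered samples or fold the time offset into the filter's prediction step.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    sensor: str
    t: float   # timestamp, s
    x: float   # longitudinal position, m
    y: float   # lateral position, m
    vx: float  # longitudinal velocity, m/s
    vy: float  # lateral velocity, m/s


def align_to(m: Measurement, t_fusion: float) -> Measurement:
    """Propagate a measurement to t_fusion, assuming constant velocity."""
    dt = t_fusion - m.t
    return Measurement(m.sensor, t_fusion, m.x + m.vx * dt, m.y + m.vy * dt, m.vx, m.vy)


def synchronize(measurements, t_fusion):
    """Bring every measurement received up to t_fusion onto the same timestamp."""
    return [align_to(m, t_fusion) for m in measurements if m.t <= t_fusion]
```

With a camera sample at 50 ms and a radar sample at 60 ms, both describing an object closing at 10 m/s, `synchronize(..., 0.10)` moves them to 100 ms so they can be compared position-for-position.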

Coordinate Transformation: This technique uses the geometry and mounting positions of the sensors to bring data from sensors mounted at different locations into one common frame. For example, the sensors on a car that is 5 meters long and 2 meters wide and on a truck that is 18 meters long and 4 meters wide are spread over very different distances, so their data must be brought into a common vehicle frame such as the rear-axle or front-axle frame.
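A minimal 2-D sketch of this transformation, assuming a rear-axle vehicle frame with x pointing forward and y to the left: each sensor's mounting pose (x, y, yaw) is a calibration value, and a point in the sensor frame is rotated by the mounting yaw and then translated by the mounting position. The `MOUNTS` values and the `sensor_to_vehicle` name are hypothetical.

```python
import math

# Hypothetical mounting calibration relative to the rear axle:
# (x in m, y in m, yaw in rad), x forward, y to the left.
MOUNTS = {
    "front_radar": (3.8, 0.0, 0.0),
    "front_left_corner_radar": (3.6, 0.8, math.radians(45.0)),
}


def sensor_to_vehicle(xs, ys, mount):
    """Transform a 2-D point from a sensor frame into the vehicle frame.

    p_vehicle = R(yaw) * p_sensor + t, where t = (mount_x, mount_y).
    """
    mx, my, yaw = mount
    c, s = math.cos(yaw), math.sin(yaw)
    return (mx + c * xs - s * ys, my + s * xs + c * ys)
```

A target 10 m straight ahead of the front radar lands at (13.8, 0.0) in the rear-axle frame; the same formula handles the angled corner radar, so detections from both can be compared directly.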

Validation Gating and Data Association: This technique determines which of the measurements received from the different sensors on the vehicle belong to the same object, e.g., a car in front.
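The sketch below illustrates gating plus association under simple assumptions: 2-D position measurements, a predicted position and 2x2 innovation covariance `S` per track, a chi-square gate at the 99% quantile for 2 degrees of freedom (about 9.21), and greedy nearest-neighbour assignment. The function names are hypothetical, and greedy assignment is a simplification of global nearest neighbour; PDA and JPDA instead weight all gated measurements probabilistically.

```python
GATE_99_2DOF = 9.21  # chi-square 99% quantile, 2 degrees of freedom


def mahalanobis_sq(z, z_pred, S):
    """Squared Mahalanobis distance of z from z_pred under 2x2 covariance S."""
    dx, dy = z[0] - z_pred[0], z[1] - z_pred[1]
    (a, b), (c, d) = S
    det = a * d - b * c
    # Inverse of [[a, b], [c, d]] is [[d, -b], [-c, a]] / det
    return (d * dx * dx - (b + c) * dx * dy + a * dy * dy) / det


def associate(tracks, measurements, gate=GATE_99_2DOF):
    """Greedy nearest-neighbour association inside a validation gate.

    tracks: list of (z_pred, S); measurements: list of (x, y).
    Returns {track_index: measurement_index}; unmatched items are omitted.
    """
    candidates = []
    for ti, (z_pred, S) in enumerate(tracks):
        for mi, z in enumerate(measurements):
            d2 = mahalanobis_sq(z, z_pred, S)
            if d2 <= gate:  # validation gating
                candidates.append((d2, ti, mi))
    candidates.sort()  # closest pairs first
    pairs, used_t, used_m = {}, set(), set()
    for _, ti, mi in candidates:
        if ti not in used_t and mi not in used_m:
            pairs[ti] = mi
            used_t.add(ti)
            used_m.add(mi)
    return pairs
```

A measurement that falls outside every gate (for example, a new object far from all tracks) stays unassigned and becomes a candidate for a new tentative track.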

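State Estimation and Prediction: This step estimates the state of each tracked object (e.g., position and velocity) from the associated measurements and predicts it forward to the next fusion cycle.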
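As one concrete instance of the linear Kalman filter mentioned later in this article, the sketch below tracks a single axis with a constant-velocity model and position-only measurements. The class name, the initial variance, and the noise values `q` (acceleration noise variance) and `r` (measurement noise variance) are assumptions chosen for illustration; in practice they come from filter tuning and sensor characterization.

```python
class KalmanCV1D:
    """Linear Kalman filter, constant-velocity model, position-only measurement.

    State x = [position, velocity], H = [1, 0].
    q: acceleration noise variance, r: measurement noise variance (assumed).
    """

    def __init__(self, pos0, vel0, var0=10.0, q=1.0, r=0.25):
        self.x = [pos0, vel0]
        self.P = [[var0, 0.0], [0.0, var0]]
        self.q, self.r = q, r

    def predict(self, dt):
        """x <- F x and P <- F P F^T + Q, with F = [[1, dt], [0, 1]]."""
        pos, vel = self.x
        self.x = [pos + vel * dt, vel]
        (a, b), (c, d) = self.P
        q = self.q
        # Q = q * [[dt^4/4, dt^3/2], [dt^3/2, dt^2]] (white-acceleration model)
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z):
        """Correct the predicted state with a position measurement z."""
        (a, b), (c, d) = self.P
        s = a + self.r          # innovation covariance S = H P H^T + R
        k0, k1 = a / s, c / s   # Kalman gain K = P H^T / S
        innov = z - self.x[0]   # innovation y = z - H x
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]
```

Calling `predict(dt)` once per fusion cycle and `update(z)` whenever an associated measurement arrives yields a velocity estimate even though only position is measured. Nonlinear sensor models (e.g., radar range and azimuth) would call for the extended Kalman filter or a particle filter instead.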

Track Management: Track management produces the final output of sensor fusion. It initializes, maintains, and deletes tracks based on track history, and it also calculates track confidence.

Ego Motion Estimation: This technique accounts for the movement of the ego vehicle itself. For example, data may be received at T+100 milliseconds and T+200 milliseconds, but during this time the vehicle has already moved. Ego motion estimation compensates for this change in the vehicle's position and orientation.
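A minimal sketch of ego motion compensation, assuming planar motion with constant ego speed and yaw rate over the interval and an x-forward, y-left frame: the point is shifted by the ego displacement along a circular arc (or a straight line when the yaw rate is near zero) and then rotated by the heading change. The function name is hypothetical, and the sketch compensates only the ego vehicle's motion; the object's own motion is handled by the state prediction.

```python
import math


def compensate_ego_motion(x, y, speed, yaw_rate, dt):
    """Re-express point (x, y) from the ego frame at T in the ego frame at T + dt.

    Assumes constant speed (m/s) and yaw rate (rad/s) over dt.
    """
    dyaw = yaw_rate * dt
    if abs(yaw_rate) < 1e-6:
        # Straight-line ego motion
        dx, dy = speed * dt, 0.0
    else:
        # Ego moves along an arc of radius speed / yaw_rate
        radius = speed / yaw_rate
        dx, dy = radius * math.sin(dyaw), radius * (1.0 - math.cos(dyaw))
    # Move to the new ego origin, then rotate by -dyaw into the new heading
    tx, ty = x - dx, y - dy
    c, s = math.cos(dyaw), math.sin(dyaw)
    return (c * tx + s * ty, -s * tx + c * ty)
```

For the T+100 ms to T+200 ms example, a stationary object 30 m ahead of an ego vehicle driving straight at 10 m/s appears at 29 m after compensation (`dt = 0.1`).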

Thus, to achieve high-quality Sensor Fusion performance, some key design aspects must be considered:

- Selection of algorithms for data association and estimation

  - Many algorithms are available for data association; popular ones are nearest neighbor (NN), probabilistic data association (PDA), and joint probabilistic data association (JPDA).

  - Popular estimation techniques include the linear Kalman filter, the extended Kalman filter, the particle filter, etc.

- The fusion strategy

  - The data association algorithm and estimation technique shall be decided based on the type of sensors used for fusion, the state estimation requirements (dynamic/static object estimation), and the sensor outputs.

- Track Management:

  - To reduce false outputs, fusion track management needs to build confidence before initializing a track. However, if this takes too long, it introduces latency that delays the AD system's response, which degrades AD system performance. The track initialization strategy shall therefore be decided considering the operating environment, feature ODD, etc. The same applies to track deletion.

- Filter tuning

  - Filter tuning is critical for filter performance. Practical aspects need to be considered during filter tuning, which can be done through sensor characterization using real-world data.
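The confidence-versus-latency trade-off in track initialization and deletion is commonly implemented with M-of-N logic. The sketch below is a hypothetical illustration (the class name and the default `m_confirm=3`, `n_window=5`, `max_misses=3` values are assumptions): a tentative track is confirmed after M associations within N cycles, dropped if it fails to get there, and a confirmed track is deleted after a run of consecutive misses. Raising M suppresses more false tracks but delays confirmation, which is exactly the latency cost described above.

```python
from collections import deque


class TrackManager:
    """Hypothetical M-of-N track logic: tentative -> confirmed -> deleted."""

    def __init__(self, m_confirm=3, n_window=5, max_misses=3):
        self.m, self.n, self.max_misses = m_confirm, n_window, max_misses
        self.history = deque(maxlen=n_window)  # 1 = associated, 0 = missed
        self.state = "tentative"
        self.misses = 0  # consecutive missed cycles

    @property
    def confidence(self):
        """Fraction of the last N cycles in which the track was associated."""
        return sum(self.history) / self.n

    def step(self, associated):
        """Record one fusion cycle and return the updated track state."""
        self.history.append(1 if associated else 0)
        self.misses = 0 if associated else self.misses + 1
        if self.state == "tentative" and sum(self.history) >= self.m:
            self.state = "confirmed"
        elif self.state == "tentative" and len(self.history) == self.n:
            self.state = "deleted"  # did not reach M hits within N cycles
        elif self.state == "confirmed" and self.misses >= self.max_misses:
            self.state = "deleted"
        return self.state
```

In use, `step()` is called once per fusion cycle with the result of data association for that track, and only confirmed tracks are reported to the downstream AD functions.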

It is also important to validate fusion to ensure software quality and scenario coverage. However, validation can lead to millions of scenarios, so it is important to use the right validation strategy to test fusion with simulated data, real-world data, or in-vehicle testing. The following are the key aspects of fusion validation:

- Edge-case scenario selection: Fusion improves perception performance by fusing noisy data from various sensors, so scenarios shall be carefully selected to cover all edge cases. Each scenario shall also specify which level of validation is required: simulation, real-world data, or in-vehicle. The objective shall be maximum coverage at the simulation level to reduce cost and time, without any compromise in test quality.

- Sensor modelling: To validate fusion in simulation, including the effects of environmental noise, a high-fidelity sensor model is required that mimics the effect of the environment on sensor performance. High-fidelity sensor modelling is an active research topic, and various techniques have been proposed, such as data-driven models and physics-based models. The right sensor model is critical for simulation.

- Validation with real-world data: A high-fidelity sensor model can reproduce environmental conditions with up to 90 to 95% accuracy, so not all scenarios can be covered in simulation, and fusion needs to be validated with real-world data.

- Vehicle testing: The final validation of fusion shall be done on the vehicle to ensure end-to-end testing at feature level, considering actual conditions, sensor latency, and actuation delay.

Fusion is therefore a critical perception component for AD performance, and one must consider the key practical aspects and define the right strategy for both design and validation to achieve the highest level of maturity of AD software.

At KPIT, we have been working on fusion for the past 7 years, and we have invested in and developed a very robust design and validation strategy to ensure the highest level of software quality. The design takes care of variant handling for multi-sensor fusion (Radar+Radar, Camera+Radar, Camera+Radar+Lidar), different sensor topologies and layouts, sensor characteristics, and sensor degradation. We have filed multiple patents for various fusion techniques, and we have been supporting various OEMs and Tier-1 customers on fusion projects for different model-year programs.

About the author

Prashant Vora is Senior Practice Director – Autonomous Driving at KPIT Technologies Ltd. He has more than 21 years of experience in algorithm and software development, verification, and validation in the aerospace and automotive domains, and he leads feature development and chassis applications in the autonomous driving practice at KPIT.